Filter bubbles

In the ongoing media post-mortem of the 2016 US presidential election, a number of news outlets have questioned the role of social media in shaping public opinion. The Guardian exposed people to ideologically opposed Facebook news feeds, and BuzzFeed published an analysis of specific Facebook features they believe have outsized political impact. These and other recent articles are in whole or in part inspired by the idea of “filter bubbles,” or the notion that people are guided into ideological cul-de-sacs by the well-meaning but ultimately harmful algorithms that decide what we see and don’t see on Facebook, Twitter, etc. This idea was popularized by Eli Pariser, author of the book The Filter Bubble: What the Internet is Hiding From You, and co-founder of the reliably cringe-inducing clickbait factory Upworthy.

Filter bubbles are often confused with echo chambers. In an echo chamber, exposure to ideologically divergent views is limited by the near-complete homogeneity of the people around you. You can’t absorb an idea if nobody ever brings it to the table. Filter bubbles are different: in a filter bubble, what you see is controlled by an algorithm that tracks your behavior, and thereby your ideological position. The worry is that if I only “like” ideologically conservative content, the Facebook news feed algorithm will give me more content it thinks I will “like,” limiting my exposure to an ideologically heterogeneous world.

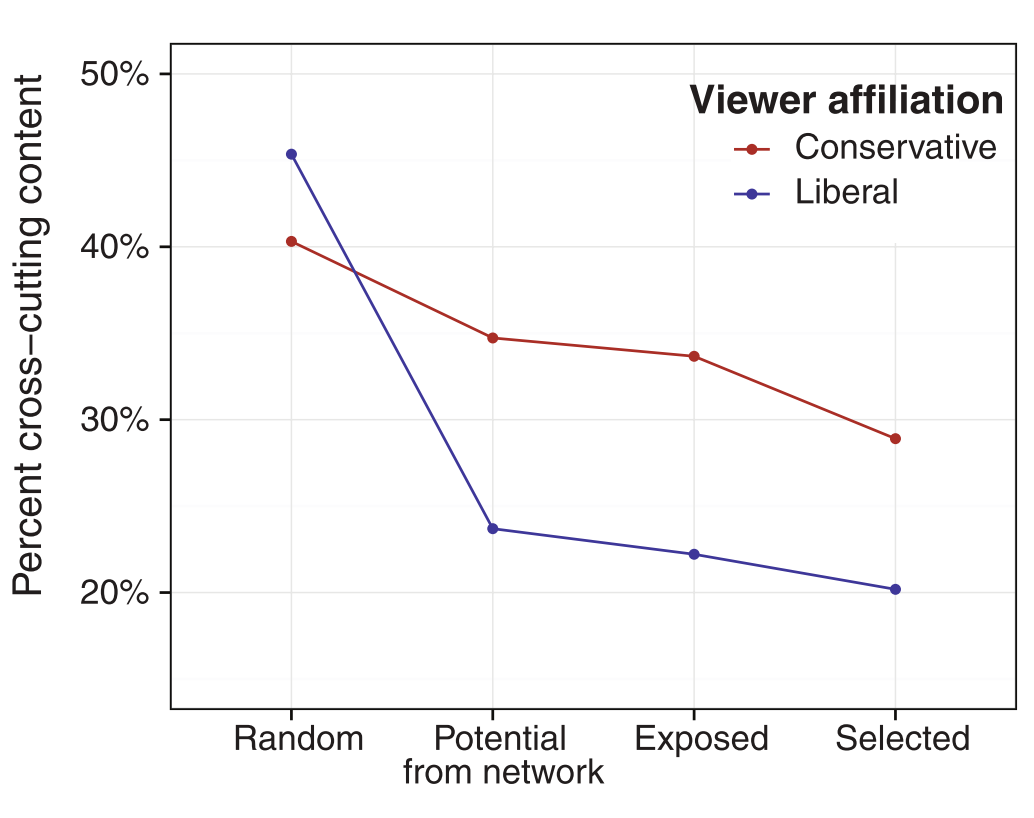

Researchers at Facebook and the School of Information at the University of Michigan undertook a large study of Facebook users (n = 10.1 million) to assess whether the Facebook news feed algorithm by its very nature limited exposure to “ideologically discordant” or “cross-cutting” content (Bakshy, Messing, & Adamic, 2015). They identify three progressive stages where exposure to such content can be limited: first, people in your social network can fail to share it; second, the news feed algorithm can hide it; third, you can fail to click on it when it appears in your news feed. As a basis for comparison, the authors measure how much cross-cutting content people would be exposed to if their friend networks were random, rather than selected at least partially on the basis of ideological similarity.
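
To make the three stages concrete, here is a minimal sketch of how the share of cross-cutting content could be tracked as stories pass through each one. Everything in it is my own assumption for illustration: the `Story` fields, the `funnel` function, and the eight toy stories are hypothetical and are not the study's data or code. I also use the whole pool of stories as a rough stand-in for the random-network baseline.

```python
from dataclasses import dataclass


@dataclass
class Story:
    """One news story from a single (hypothetical) user's point of view."""
    cross_cutting: bool      # ideologically discordant for this user
    shared_by_friend: bool   # stage 1: someone in the user's network shared it
    shown_in_feed: bool      # stage 2: the ranking algorithm surfaced it
    clicked: bool            # stage 3: the user clicked on it


def cross_cutting_share(stories):
    """Fraction of the given stories that are cross-cutting (0.0 if empty)."""
    stories = list(stories)
    if not stories:
        return 0.0
    return sum(s.cross_cutting for s in stories) / len(stories)


def funnel(stories):
    """Share of cross-cutting content remaining at each stage."""
    shared = [s for s in stories if s.shared_by_friend]
    shown = [s for s in shared if s.shown_in_feed]
    clicked = [s for s in shown if s.clicked]
    return {
        "random": cross_cutting_share(stories),    # baseline: the whole pool
        "potential": cross_cutting_share(shared),  # what friends shared
        "exposed": cross_cutting_share(shown),     # what the feed showed
        "selected": cross_cutting_share(clicked),  # what the user clicked
    }


# Toy data, invented purely for illustration: 4 aligned and 4 cross-cutting stories.
toy_stories = [
    Story(False, True, True, True),
    Story(False, True, True, True),
    Story(False, True, True, False),
    Story(False, True, False, False),
    Story(True, True, True, False),
    Story(True, True, False, False),
    Story(True, False, False, False),
    Story(True, False, False, False),
]

result = funnel(toy_stories)
for stage, share in result.items():
    print(f"{stage}: {share:.0%} cross-cutting")
```

In this toy example the share falls at every stage, but the point of the sketch is only the bookkeeping: a filter bubble effect specifically would show up as the gap between "potential" and "exposed," since that is the only step the algorithm controls outright.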

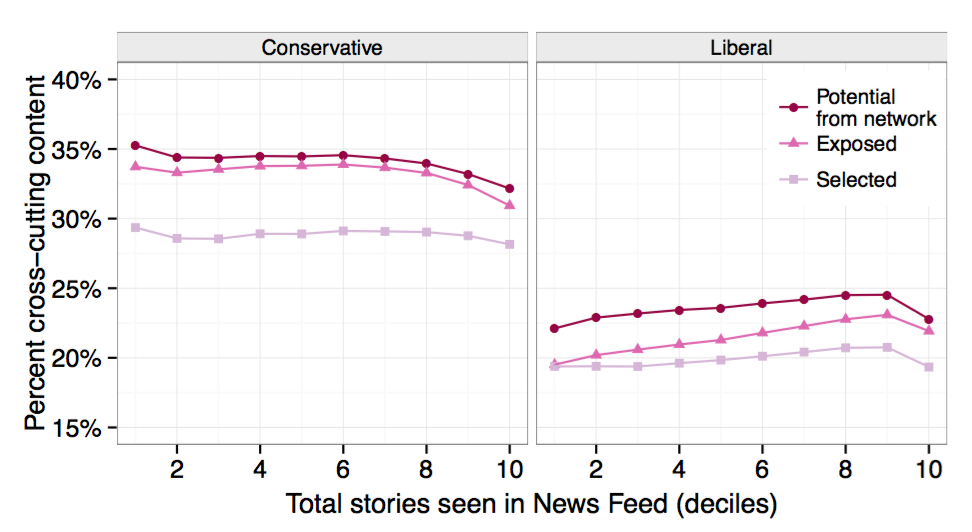

Drops in the level of cross-cutting content at two of these stages could show a filter bubble effect: the move from potential to actual exposure, and the move from exposure to selection (or clicking). The latter matters for filter bubbles because articles that appear higher in the feed are clicked on more frequently, and an algorithm determines this position. The results do show a filter bubble effect, but a small one: the news feed hides some cross-cutting content from liberal and conservative users completely, and shifts some down the timeline. Before the algorithm filters anything, liberal feeds have 24% cross-cutting content on average, and conservative feeds have 35%. After filtering, these figures drop to 19% and 33%, respectively. Another way of looking at it: Facebook users actually do see most of the cross-cutting content shared by their network, but because of how we pick our friends, there’s not a lot of cross-cutting content in our networks to begin with.
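
For a sense of scale, here is a quick back-of-the-envelope calculation using only the four percentages quoted above. The function name `filter_effect` is my own, and this is just arithmetic on the figures as reported in this post, not a reanalysis of the study.

```python
def filter_effect(before_pct, after_pct):
    """Return (percentage-point drop, relative drop) between two percentages."""
    point_drop = before_pct - after_pct
    relative_drop = point_drop / before_pct
    return point_drop, relative_drop


# Cross-cutting share (before filtering, after filtering), as quoted in the text.
reported = {"liberal": (24, 19), "conservative": (35, 33)}

for group, (before, after) in reported.items():
    points, relative = filter_effect(before, after)
    print(f"{group}: {points} points, {relative:.1%} relative")

# liberal: 5 points, 20.8% relative
# conservative: 2 points, 5.7% relative
```

By either measure, the drop in these figures is larger for liberals than for conservatives, though in both cases most of the cross-cutting content survives the filter.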

Recent reporting on fake news found that it’s largely targeted toward conservatives. Are conservatives also more affected by filter bubbles? Compared to randomly selected social networks, both liberals and conservatives have substantially less potential exposure to cross-cutting content. Perhaps surprisingly, users who identify as conservative are both exposed to and actually click on a larger percentage of cross-cutting content than liberals. And self-identified liberals have much more ideologically homogeneous networks than conservatives. So if anybody is living in a filter bubble, it’s liberals.

There are a number of missing pieces that make these findings hard to interpret. Is this small filter bubble effect nevertheless big enough to shift political outcomes? (If so, all things being equal, we should expect more conservatives to be pulled out of their bubbles than liberals.) How strong a filter bubble do we want in the first place, and what would our ideal filter bubble look like? What kinds of trades are we willing to make to increase ideological heterogeneity in our social networks? (What if for every two friends you added on Facebook you were forced to connect with a random person from outside your social network? Would that be too much? Too little? The more I think about this the more it reminds me of false notions of “balance” in reporting: for every movie I watch about an old man who knits hats for prematurely born children on Upworthy, should I be required to watch an anime racist on YouTube deliver a lecture on the Clinton body count?) What is the effect of exposure to cross-cutting content in the first place? Does exposure cause us to reconsider our beliefs, or do we react by bolstering our preexisting point of view? (Do we gleefully “hate watch” cross-cutting news like a hilariously bad movie?) This research focused on “hard news”—but to what extent can reading “hard news” change our beliefs?

I suspect one of the reasons that filter bubbles, echo chambers, and fake news are getting so much air time right now is that these explanations point to relatively simple moral failings and possible solutions: Facebook fails to accurately represent the heterogeneity of our social networks, Facebook fails to identify fake news, and so on. If this is the case, we are blameless, and Facebook could change its algorithms, either voluntarily or because of legal regulation, and thus dramatically improve our political situation. The alternative is scarier: echo chambers, filter bubbles, and fake news are relatively minor problems that expose preexisting cracks in our civic fundament. Those cracks include the tendency to associate with people who look like we do, or who think like we do; the fragmentation of the attention economy and the difficulty of identifying and interpreting facts; the overall low quality of “real” news; racism; apathy; disenfranchisement; economic structures that reinforce isolation; the potential impact of changing social norms; and so on. It is easier to blame Facebook than to turn our attention and energy toward social forces that emerge from teeming interpersonal flows.

Bibliography:

Bakshy, E., Messing, S., & Adamic, L. A. (2015). Exposure to ideologically diverse news and opinion on Facebook. Science, 348(6239), 1130–1133. http://doi.org/10.1126/science.aaa1160