Online echo chambers

In the wake of Trump’s election, many of my friends have asked whether social media sites like Facebook and Twitter, and search engines such as Google, have exacerbated existing political divisions by creating “echo chambers” and “filter bubbles.” Today Mark Zuckerberg, CEO of Facebook, defended the company’s news feed algorithms, making the case that the proliferation of fake news articles was not a problem, and emphasizing the necessity of not introducing bias into the system. This sidesteps a perhaps larger concern: that even a “neutral” site structure can allow users to create strongly biased echo chambers that reinforce partisan animus.

In this post I review Flaxman, Goel, & Rao (2016), an impressive paper by researchers at Oxford, Stanford, and Microsoft Research that examines internet news reading behavior. The paper directly addresses whether or not social media and search engines contribute to an echo chamber effect, where users are overexposed to content reinforcing their political beliefs, and underexposed to content offering diverging perspectives.

Data set and study limitations

The authors collected web browsing time series data during a three-month period in 2013 from 50,383 people who actively read the news. These users were drawn from a larger sample of 1.2 million US users of the Bing Toolbar, a plugin for Internet Explorer. The sample was cut down to size by (1) removing participants who viewed fewer than 10 news articles, and (2) removing participants who viewed fewer than 2 opinion pieces. After (1), they had 173,450 people, and after (2) they were left with the final count of 50,383, or ~4% of the sample. It is striking that out of 1.2 million users, at most ~14% actively read more than 3 news articles per month. Off the bat, this suggests that any echo chamber effects on political thought and action are likely to be much smaller than the effect of overall disengagement with news.
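The percentages above follow directly from the reported counts. Here is a quick arithmetic check; the variable names are mine, and 1.2 million is the rounded figure quoted above, so the results are approximate.

```python
# Counts quoted in the post; 1.2 million is a rounded figure.
toolbar_users = 1_200_000      # initial Bing Toolbar sample
after_news_filter = 173_450    # viewed at least 10 news articles
after_opinion_filter = 50_383  # also viewed at least 2 opinion pieces

news_reader_share = after_news_filter / toolbar_users
final_sample_share = after_opinion_filter / toolbar_users

# The 10-article threshold spread over the three-month window.
articles_per_month = 10 / 3

print(f"passed news filter: {news_reader_share:.1%}")
print(f"final sample: {final_sample_share:.1%}")
print(f"threshold per month: {articles_per_month:.1f}")
```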

The data were further limited to the top 100 websites visited by users that were classified as news, politics/news, politics/media, and regional/news on the Open Directory Project. Highlighting the low overall level of engagement with news, the authors note that only 1 in 300 outbound clicks from Facebook “correspond to substantive news,” based on their criteria. Most clicks instead go to “video- and photo-sharing sites,” e.g., YouTube and Instagram. This strikes me as a blind spot, as these “sharing” sites are home to a wide variety of political content. It’s also important to note that the data contained only clicked links and typed website addresses; “likes,” “favorites,” “shares,” “retweets,” and other site-specific actions that reflect political thought were not tracked. This study gives us no way to evaluate echo chamber effects on the thought and action of the vast majority of internet users, who avoid the news and stick to social and sharing sites. However, if regular newsreaders are also more likely to vote (which seems plausible to me), assessing the strength of an echo chamber effect on this group seems prudent.

Measuring political slant

The political slant of news sites was calculated in terms of their “conservative share,” or the fraction of each site’s readership that originated from IP addresses in zip codes that voted Republican vs. Democratic in the 2012 presidential election. These zip code level data were weighted by the percentage of actual Republican vs. Democratic voters. The rank ordering of news sites by conservative share (in the supplementary materials) is interesting in itself. Many of the most ideologically polarized sites are local news, e.g., the Orange County Register (conservative share = .15) and the Knoxville News Sentinel (conservative share = .96). This could be indirect evidence for the “big sort” hypothesis, that people are relocating to areas with others who share their political views.
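To make the definition concrete, here is a minimal sketch of a conservative share calculation as I understand it from the description above. The function name, the input format, and the toy numbers are my own assumptions for illustration, not the authors' code or data.

```python
def conservative_share(visitor_zips, republican_vote_share):
    """Average Republican vote share over the zip codes of a site's visits.

    visitor_zips: one zip code per visit (hypothetical input format).
    republican_vote_share: zip code -> Republican share of the two-party vote.
    """
    shares = [
        republican_vote_share[z]
        for z in visitor_zips
        if z in republican_vote_share  # skip visits with no vote data
    ]
    if not shares:
        raise ValueError("no visits from zip codes with known vote share")
    return sum(shares) / len(shares)


# Toy data: two visits from a 60% Republican zip code, one from a 30% one.
toy_vote_share = {"00001": 0.60, "00002": 0.30}
toy_visits = ["00001", "00001", "00002"]
toy_share = conservative_share(toy_visits, toy_vote_share)
print(round(toy_share, 2))
```

Averaging vote shares per visit, rather than counting each zip code as simply red or blue, is one way to read the weighting step; a site read mostly in closely divided areas then lands near .5 regardless of which party narrowly won there.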

Sites on equal but opposite sides of the 50% conservative share mark do not strike me as having equal but opposite biases in their coverage. For example, the BBC (which reads to me as centrist/liberal—certainly not leftist) has a conservative share of .3, whereas its conservative-share opposite at .7 is Breitbart (which I consider to be on the far right). Sites with conservative shares greater than .7 are mostly local news, with the exception of Topix at .96.

To evaluate the strength of echo chamber effects, the authors ask two key questions. First, how ideologically polarized are news readers? And second, do users read a range of news across the ideological spectrum, or do they stick to a narrow band reflecting a single ideology?

How polarized are news readers?

The authors assess polarization by defining “ideological segregation” as the expected value of the difference in polarity scores between two randomly selected users. The polarity score of an individual is the mean of conservative shares across their visited news sites, estimated using a fancy hierarchical model. Approximately 66% of users had a polarity score between .41 and .54; that is, they are boring centrists who get their news from sites like USA Today (conservative share = .47). Segregation across the user population is .11, which the authors note corresponds to the difference in polarity between, e.g., ABC (conservative share = .48) and Fox News (conservative share = .59).
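A minimal sketch of these two definitions, taking them at face value: polarity as a plain mean (the authors' hierarchical model is not reproduced here) and segregation as the average absolute difference over all pairs of users. The choice of absolute difference as the distance, and the function names, are my assumptions; the paper's exact estimator may differ.

```python
from itertools import combinations
from statistics import mean


def polarity(site_shares):
    """Plain mean of the conservative shares of the sites a user visited."""
    return mean(site_shares)


def segregation(polarity_scores):
    """Mean absolute difference in polarity over all pairs of distinct users."""
    pairs = list(combinations(polarity_scores, 2))
    if not pairs:
        raise ValueError("need at least two users")
    return mean(abs(a - b) for a, b in pairs)


# Two hypothetical users: one reads only ABC (.48), the other only Fox News (.59).
abc_reader = polarity([0.48, 0.48])
fox_reader = polarity([0.59])
gap = segregation([abc_reader, fox_reader])
print(round(gap, 2))
```

With only these two users the segregation is just the ABC to Fox News gap, which is the comparison the authors use to give the population-wide value of .11 an intuitive scale.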

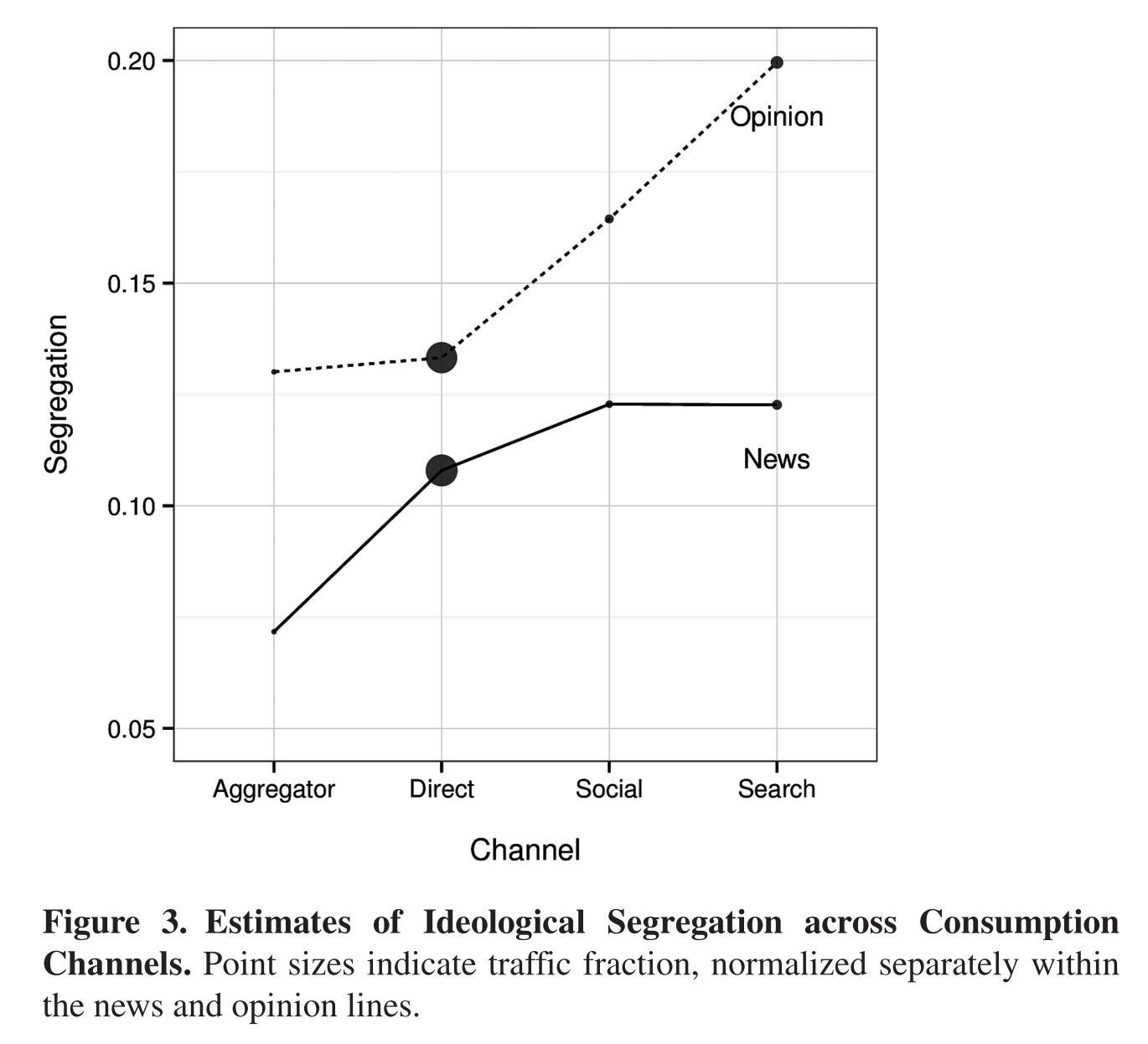

One way to look for an echo chamber effect is to ask if social media and search behavior show greater segregation than other kinds of browsing. The authors assess this by splitting user activity into categories based on the type of site used to find news, and whether the news is identified as an opinion piece (using a fancy corpus-based binary classifier). The types of browsing activity (or “channels”) identified by the authors are: aggregators (e.g., Google News), direct (typing a URL into the browser), social (e.g., Facebook), and search (e.g., Google, Bing). Segregation was higher for opinion pieces regardless of site type. Segregation was also higher for social and search traffic than for direct traffic, and quite a bit lower for aggregators, consistent with a small but reliable echo chamber effect.

Directly entering a URL into the browser accounts for 67% of opinion traffic and 79% of news traffic. This is followed by search (23% opinion, 14% news), social (10% opinion, 6% news), and aggregators (.4%). It’s plausible that direct browsing choices are influenced by social media and search browsing, but this study alone can’t tell us how users develop their direct browsing habits.

How much segregation is healthy? The highest segregation value reported is .2, for users who find opinion articles using search, which corresponds to the distance between The Daily Kos (conservative share = .39) and Fox News (conservative share = .59). The authors note that this difference “represents meaningful differences in coverage,” and is “within the mainstream political spectrum.” In the wake of Trump’s election, this is cold comfort. Answering the question empirically would be hard. A controlled experiment would manipulate ideological segregation across a set of polities on equal political and cultural footing and observe the results: presumably the rate of protests, coups, uprisings, and so on, would be affected. A correlational approach would observe browsing habits across a range of polities, but would need to contend with confounding inter-polity differences in political structure and culture.

How big is the ideological range of the average user?

Do users get their news from a variety of sources with different political slants, or do they stick to reading a limited number of sources in a small ideological range? The authors calculate the “isolation” of each user by estimating the standard deviation of the estimated conservative share of the sites they read. They find that users are highly isolated, rarely reading news with conservative shares further than ±.06 from their average. For example, a centrist who typically reads NBC (conservative share = .5) will only rarely read news sources with conservative shares lower than .44 or higher than .56, which excludes sites such as CNN (conservative share = .42), the New York Times (conservative share = .31), and Fox News (conservative share = .59). Recapitulating the theme of general disengagement, this isolation arises because 78% of users get the majority of their news from a single site, and 94% get the majority of their news from two sites at most.
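As a sketch, isolation in this sense is just the standard deviation of the conservative shares across what a user reads. I use the population standard deviation on raw values here, which is my simplification; the authors estimate it within their model, and the reading lists below are made up.

```python
from statistics import pstdev


def isolation(article_shares):
    """Population standard deviation of conservative shares across a user's reading.

    Smaller values mean a narrower ideological range, i.e. a MORE isolated user.
    """
    return pstdev(article_shares)


# Hypothetical readers, one conservative share per article read.
single_site_reader = [0.5, 0.5, 0.5, 0.5]   # only ever reads one site
narrow_reader = [0.44, 0.56]                # splits evenly just around .5
wide_reader = [0.31, 0.59]                  # e.g. New York Times and Fox News

for name, shares in [
    ("single site", single_site_reader),
    ("narrow", narrow_reader),
    ("wide", wide_reader),
]:
    print(name, round(isolation(shares), 2))
```

Note the direction of the scale: a reader who gets everything from one site has a standard deviation of zero, which is why the finding that most users rely on a single site translates directly into very small isolation values.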

Isolation varies with polarity: users who stick to sites with a conservative share around .5 are the most isolated (~.06), those who read sites with conservative share less than .3 are slightly less isolated (~.08), and those who read sites with conservative share greater than .7 are the least isolated (~.19). However, as observed before, the linear conservative share spectrum does not correspond to a linear ideological spectrum: sites on opposite sides of the 50% share line are not equally biased in opposite directions. What we really want to know is how frequently users read content from news sources that are meaningfully ideologically different from their regular news.

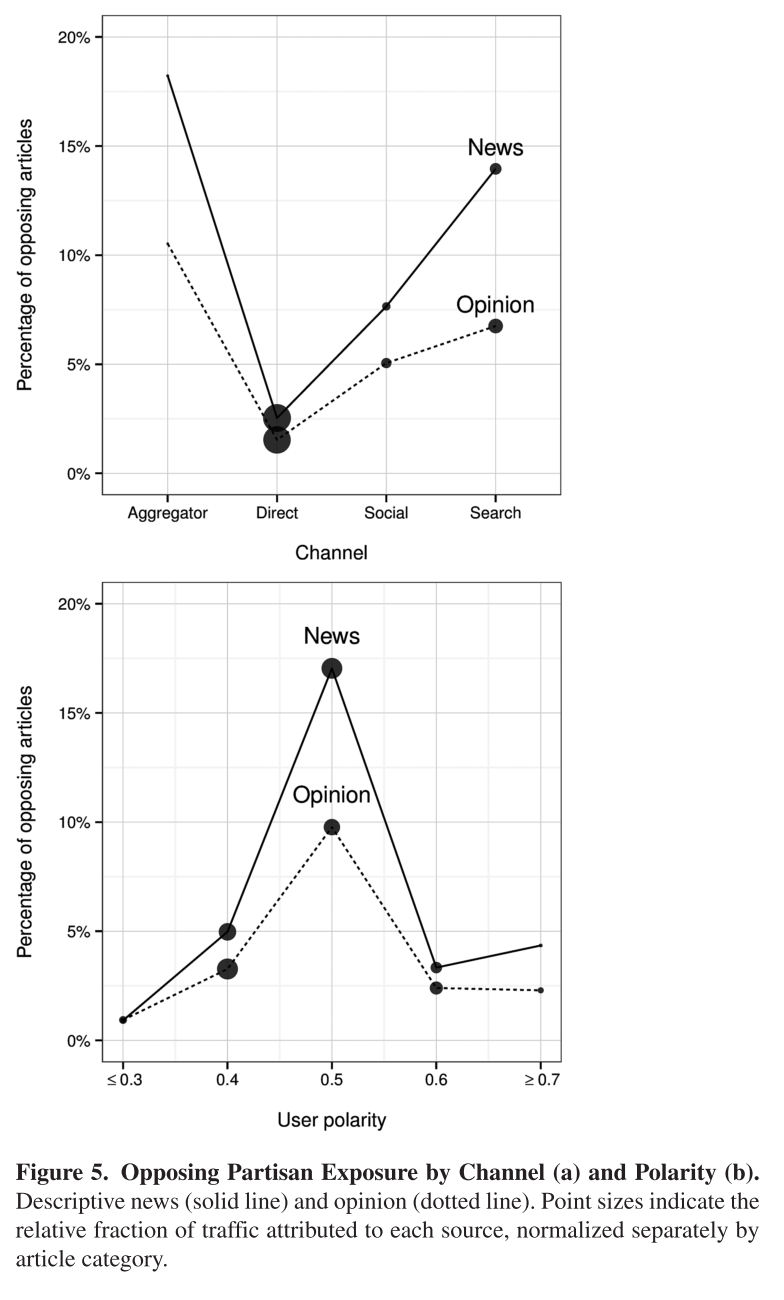

In general, people stay in their ideological comfort zone, and more so for partisans on both sides than for centrists. However, as mentioned above, centrists have the narrowest ideological bandwidth, so although they are willing to cross the aisle, they are not doing so by reading far left or far right news sources. At the extremes, highly polarized users get between 1% and 4% of their news from ideologically opposed sources. Users arrive at opposing articles more frequently using aggregators, social media, and search than through direct browsing, which results in almost no exposure to opposing views. The effect of social media and search on direct browsing choices remains unclear. These results are hard to interpret and, as with the results concerning ideological segregation, they raise a normative question: how many ideologically opposing articles should people read?

Discussion

Social media and search activity show small but meaningful echo chamber effects for regular news readers. At the same time, social media and search expose people to a larger share of ideologically opposing news articles than direct browsing. These effects are absolutely dwarfed by two larger effects: (1) the vast majority of internet users do not read the news, and (2) the vast majority of those who do read the news use one or two websites at most, which they browse to directly, without social media or search as an intermediary.

This research should not be read as disconfirming the importance of echo chamber effects. Behavior on social media and sharing sites, including “likes,” “shares,” “favorites,” and so on, is not in the scope of the analysis, which therefore excludes the vast majority of politically relevant internet behavior. It is possible that there are profound echo chamber effects that cannot be detected based on data from the URL bar alone.

Further, though it emphasizes the dominance of direct browsing, this study offers no information about how direct browsing habits are influenced by previous experiences using social media and search. We know that individual users have extremely narrow ideological bandwidth, but would this be the case if the internet or social media were structured in a different way? What if, instead of letting users choose who they are friends with on Facebook, everyone you conversed with in your day-to-day life were automatically added to your friends list? Would this increase or decrease ideological bandwidth? What if web browsers loaded a bipartisan news aggregator in new windows by default? Other factors also undoubtedly affect ideological bandwidth, and may contribute to echo chamber effects, including television watching, education, religion, the “big sort,” and so on.

Investigation of echo chamber effects raises important normative questions. How much ideologically opposing news material should people read? Do social media sites and search engines have a moral responsibility to create ideological balance? Or do they have a higher responsibility to the truth, independent of political ideology? Making the case that social and search companies should change their algorithms requires answers to some of these normative questions, as well as additional research tying a broader range of user actions (e.g., “likes” and “shares”) to important political outcomes (e.g., voting likelihood and discriminatory behavior).

Bibliography

Flaxman, S. R., Goel, S., & Rao, J. M. (2016). Filter Bubbles, Echo Chambers, and Online News Consumption. Public Opinion Quarterly, 80, 298–320. http://doi.org/10.1093/poq/nfw006